ams OSRAM – Coffee cup or travel mug? New high-resolution dToF sensor sees the difference

Premstaetten, Austria and Munich, Germany (August 27, 2025) — The TMF8829 direct Time-of-Flight (dToF) sensor significantly increases resolution — from the previously common 8×8 zones to 48×32 — and it is designed to detect subtle spatial differences and distinguish closely spaced or slightly varied objects.

The new sensor from ams OSRAM can tell whether an espresso cup or a travel mug is placed under a coffee machine, ensuring the right amount is dispensed every time. This kind of precision is critical for a broad spectrum of applications: from logistics robots that distinguish between nearly identical packages, to camera systems that maintain focus on moving objects in dynamic video scenes.

“The new dToF sensor supports precise 3D detection and differentiation in diverse applications — without a camera and with stable performance across varying targets, distances, and environmental conditions,” says David Smith, Product Marketing Manager at ams OSRAM.

New benchmark for dToF technology

With dToF technology, the sensor emits light pulses in the invisible infrared range. These pulses reflect from objects in the sensor’s field of view and return to the sensor, which calculates the distance based on the time it takes for the light to travel — similar to how the delay of an echo reveals distance: the longer it takes, the farther away the object. Multi-zone sensors enhance this by capturing reflected light from multiple viewing angles (zones), like a network of echo points. This enables the creation of detailed 3D depth maps.
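The echo analogy above reduces to one line of arithmetic: the pulse covers the distance twice, so the distance is the speed of light times the round-trip time, divided by two. The following Python sketch illustrates that relationship only; the function names are hypothetical and do not correspond to any ams OSRAM API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the target distance in meters for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A 10 ns echo corresponds to a target roughly 1.5 m away.
print(f"{distance_from_round_trip(10e-9):.3f} m")
# A target at 11 m returns its echo after about 73.4 ns.
print(f"{round_trip_from_distance(11.0) * 1e9:.1f} ns")
```

The very short times involved show why this kind of measurement needs dedicated timing hardware rather than general-purpose software timers.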

The TMF8829 divides its field of view into up to 1,536 zones — a significant improvement over the 64 zones in standard 8×8 sensors. This higher resolution enables finer spatial detail. For example, it supports people counting and presence detection in smart lighting systems, object detection and collision avoidance in robotic applications, and intelligent occupancy monitoring in building automation. The detailed depth data also provides a foundation for machine learning models that interpret complex environments and enable intelligent interaction with surroundings.
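To make the zone arithmetic concrete, here is a minimal Python sketch that arranges a flat list of per-zone distances into a depth map and finds the closest zone. The 48-column by 32-row orientation, the row-major ordering, the function names, and the sample values are all assumptions for illustration; the announcement does not describe the sensor's actual output format.

```python
from typing import List, Tuple

COLS, ROWS = 48, 32  # assumed orientation: 48 columns by 32 rows


def to_depth_map(flat_mm: List[float], cols: int = COLS, rows: int = ROWS) -> List[List[float]]:
    """Reshape a flat, row-major list of zone distances (mm) into rows."""
    if len(flat_mm) != cols * rows:
        raise ValueError(f"expected {cols * rows} zones, got {len(flat_mm)}")
    return [flat_mm[r * cols:(r + 1) * cols] for r in range(rows)]


def nearest_zone(depth_map: List[List[float]]) -> Tuple[int, int, float]:
    """Return (row, col, distance_mm) of the closest zone."""
    return min(
        ((r, c, d) for r, row in enumerate(depth_map) for c, d in enumerate(row)),
        key=lambda item: item[2],
    )


# Synthetic scene: a wall at 1000 mm with one closer object at 400 mm.
flat = [1000.0] * (COLS * ROWS)
flat[10 * COLS + 20] = 400.0  # row 10, column 20
depth_map = to_depth_map(flat)

print(COLS * ROWS, (COLS * ROWS) // (8 * 8))  # 1536 zones, 24 times an 8x8 grid
print(nearest_zone(depth_map))                # (10, 20, 400.0)
```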

Measuring just 5.7 × 2.9 × 1.5 mm (thinner than a cent coin and more compact than typical sensors with lower resolution), the TMF8829 delivers high resolution in a format ideal for space-constrained devices. Because it operates without a camera, it supports privacy-sensitive applications. When paired with a camera, the sensor enables hybrid vision systems like RGB Depth Fusion, combining depth and color data for AR applications such as virtual object placement.

Classified as a Class 1 eye-safe device, the TMF8829 uses a dual VCSEL (Vertical Cavity Surface Emitting Laser) light source to measure distances up to 11 meters with 0.25 mm precision — sensitive enough to detect subtle movements like a finger swipe. With its 48×32 zones, the sensor covers an 80° field of view, delivering depth information across a scene comparable to that of a wide-angle lens. On-chip processing reduces latency and simplifies integration. Instead of relying on a single signal, the sensor builds a profile of returning light pulses to identify the most accurate distance point — ensuring stable performance even with smudged cover glass. Full histogram output supports AI systems in extracting hidden patterns or additional information from the raw signal.
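The idea of building a profile of returning pulses and picking the best distance point can be sketched with a simple histogram peak search. This is not the TMF8829's on-chip algorithm, which the announcement does not detail. It is a simplified illustration in Python, and the bin width, the number of early bins skipped to mimic cover-glass rejection, the minimum count, and the sample histogram are all assumptions.

```python
from typing import List, Optional

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def peak_distance_m(
    histogram: List[int],
    bin_width_s: float,
    skip_bins: int = 2,
    min_count: int = 10,
) -> Optional[float]:
    """Estimate distance from a photon-arrival histogram.

    histogram[i] counts photons whose round-trip time fell into bin i.
    The first `skip_bins` bins are ignored as a crude stand-in for
    rejecting reflections from a smudged cover glass (an assumption).
    """
    candidates = histogram[skip_bins:]
    if not candidates:
        return None
    peak_offset = max(range(len(candidates)), key=candidates.__getitem__)
    peak_bin = peak_offset + skip_bins
    if histogram[peak_bin] < max(min_count, 1):
        return None  # no return strong enough to trust
    # Refine with a count-weighted centroid over the peak and its neighbours.
    lo = max(skip_bins, peak_bin - 1)
    hi = min(len(histogram) - 1, peak_bin + 1)
    total = sum(histogram[lo:hi + 1])
    centroid_bin = sum(i * histogram[i] for i in range(lo, hi + 1)) / total
    # Use the bin centre as arrival time, then halve for the round trip.
    round_trip_s = (centroid_bin + 0.5) * bin_width_s
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Bin 0 holds a strong near-field reflection; the real target peaks at bin 7.
sample = [90, 40, 3, 2, 4, 3, 20, 60, 20, 3]
print(peak_distance_m(sample, bin_width_s=1e-9))
```

Taking the single largest bin would report the near-field reflection in bin 0. Skipping the earliest bins and working on the whole profile is what lets the sketch lock onto the target at roughly 1.12 m instead.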

The consistent commitment of ams OSRAM to innovation ensures that the company remains at the forefront of technological advancements. This is highlighted by the company’s strong IP portfolio comprising more than 1000 patent rights and other IP rights in the field of VCSEL and 3D sensing technologies.

Further information

The TMF8829 will be available in Q4 2025. More information on the TMF8829 is available on the ams OSRAM website.

The TMF8829 direct Time-of-Flight (dToF) sensor is designed to detect subtle spatial differences and distinguish closely spaced or slightly varied objects.

Image: ams OSRAM

Source: ams OSRAM

EMR Analysis

More information on OSRAM Light AG: See the full profile on EMR Executive Services

More information on ams-OSRAM Group: See the full profile on EMR Executive Services

More information on Aldo Kamper (Chairman of the Management Board and Chief Executive Officer, ams-OSRAM + Chief Executive Officer, OSRAM Licht AG): See the full profile on EMR Executive Services

More information on TMF8829 Direct Time-of-Flight (dToF) Sensor by ams-OSRAM: https://ams-osram.com/products/sensor-solutions/direct-time-of-flight-sensors-dtof/ams-tmf8829-48×32-multi-zone-time-of-flight-sensor + The TMF8829 is a direct time-of-flight (dToF) sensor with configurable resolution of 8×8, 16×16, 32×32 & 48×32. The device is based on SPAD, TDC, and histogram technology and achieves a detection range of up to 11000 mm with an 80° field of view. All processing of the raw data is performed on-chip, and the TMF8829 provides distance information along with confidence values, signal amplitude, and ambient light on its interface. Internally, the TMF8829 uses dToF histograms and peak detection, and therefore, it is highly tolerant to smudges on the cover glass. Additionally, the TMF8829 can handle multiple objects per depth point simultaneously without degrading accuracy.

More information on David Smith (Senior Product Manager, Direct Time of Flight, ams-OSRAM): See the full profile on EMR Executive Services

EMR Additional Notes:

- Direct Time-of-Flight (dToF) Sensor:

- Direct Time-of-Flight (dToF) is a prominent depth sensing method in Light Detection and Ranging (LiDAR) applications. A dToF sensor is a type of distance sensor that works by directly measuring the time it takes for a single pulse of light to travel from the sensor, reflect off an object, and return to the sensor.

- Time-of-Flight (ToF) sensors are used for a range of applications, including robot navigation, vehicle monitoring, people counting, and object detection. ToF distance sensors use the time that it takes for photons to travel between two points to calculate the distance between the points.

- Infrared and X-Ray:

- Infrared (IR): Infrared is a type of electromagnetic radiation with a wavelength longer than visible light, but shorter than microwaves. It sits on the lower-energy side of the visible spectrum.

- Wavelength: The wavelength ranges from approximately 700 nm to 1 mm.

- Source: It’s produced by any hot body or molecule, including humans, animals, and the sun.

- X-Ray: X-rays are a type of electromagnetic radiation with a very short wavelength and therefore a very high energy level. They sit on the high-energy side of the spectrum, beyond ultraviolet light.

- Wavelength: The wavelength ranges from approximately 10 nm to 0.01 nm.

- Source: They are typically produced by bombarding a metal target with high-energy electrons.

- Sources of Rays:

- Visible rays are emitted or reflected by objects.

- Infrared rays are produced by hot bodies and molecules.

- Ultraviolet rays are produced by special lamps and very hot bodies, like the sun.

- X-rays are produced by bombarding a metal target with high-energy electrons.

- RGB – RGBCCT – RGBW – RGBWW – RGBIC:

- RGB (Red, Green and Blue): RGB LED products combine these three colours to produce over 16 million hues of light. Note that not all colours are possible. Some colours are “outside” the triangle formed by the RGB LEDs. Also, pigment colours such as brown or pink are difficult, or impossible, to achieve.

- While RGB can produce color that is close to white, a dedicated white LED chip provides a pure white tone that is better for task and accent lighting when color is not needed. The extra white chip is a feature of RGBW or RGBCCT strips, not standard RGB strips.

- RGBCCT (RGB + Correlated Color Temperature): RGBCCT consists of RGB and CCT. CCT (Correlated Color Temperature) means that the color temperature of the LED strip light can be adjusted between warm white and cool white. Thus, RGBCCT is a more precise term than RGBWW strip light.

- RGBW (RGB + White): RGBW is an LED lighting technology that uses four subpixels (red, green, blue and white). In an RGB strip, white is produced by illuminating R, G and B LEDs at their highest intensity; however, the color filters diminish some of the light. RGBW produces a brighter white because the white LED is not filtered.

- RGBWW (RGB + White + Warm White): RGBWW LED lights use a 5-in-1 LED chip with red, green, blue, a cool white and a warm white for color mixing. The only difference between RGBW and RGBWW is that RGBW typically has a single white LED chip, while RGBWW has a dedicated warm white chip and a dedicated cool white chip. RGB lights with extra warm white create a softer yellow-white color.

- RGBIC (RGB + Integrated Circuit): RGBIC stands for Red, Green, Blue, Integrated Circuit. An RGBIC strip light can do everything an RGB light strip can. It can produce red, green, and blue lights alongside any combination of the three. But it also comes equipped with multiple integrated circuits on the strips. Compared to RGB strip lights, the RGBIC strip lights have an added color chasing mode, and with a unique built-in IC chip, the RGBIC strip lights are able to display multi-colors at one time like a rainbow or aurora. RGB can only display one color at a time while RGBIC can display multiple colors at the same time.

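The "over 16 million hues" figure for RGB follows from simple arithmetic if each of the three channels is driven with 8-bit resolution. That bit depth is a common convention and an assumption here, since the notes above do not state it. A short Python sketch:

```python
BITS_PER_CHANNEL = 8  # assumption: typical 8-bit control per colour channel


def color_combinations(bits_per_channel: int, channels: int = 3) -> int:
    """Count the distinct settings for independently dimmed channels."""
    levels = 2 ** bits_per_channel  # brightness steps per channel
    return levels ** channels


print(2 ** BITS_PER_CHANNEL)                 # 256 levels per channel
print(color_combinations(BITS_PER_CHANNEL))  # 16777216 combinations
```

This counts control settings, not perceptually distinct colours, which is consistent with the caveat that some colours remain outside what the three LEDs can reach.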
- VR/AR/XR (Virtual Reality/Augmented Reality/Extended Reality):

- Augmented Reality (AR): Adds digital elements to a live view, often by using the camera on a smartphone. Examples of augmented reality experiences include Snapchat lenses and the game Pokémon Go.

- Virtual Reality (VR): Implies a complete immersion experience that shuts out the physical world.

- AR vs. VR:

- Setting: AR uses a real-world setting while VR is completely virtual.

- User Interaction: AR users are present in the real world while interacting with digital content; VR users are immersed in a simulated environment controlled by the system.

- Device: VR requires a headset device, but AR can be accessed with a smartphone.

- Purpose: AR enhances or augments the real world with digital information, while VR creates a separate, fictional reality for the user to experience.

- Extended Reality (XR): Umbrella term encapsulating Augmented Reality (AR), Virtual Reality (VR), Mixed Reality (MR), and everything in between. Although AR and VR offer a wide range of revolutionary experiences, the same underlying technologies are powering XR.

- Laser Class 1 “eye-safe-device”:

- The “Class 1” designation is the lowest and safest category in the international laser safety classification system (established by standards like IEC 60825-1).

- The emitted radiation from a Class 1 device is so low that it is well below the Maximum Permissible Exposure (MPE) limit for the human eye. This means that even if a person were to stare directly into the beam, the power output is not strong enough to cause any harm, even over a long period.

- Common examples of Class 1 eye-safe devices include:

- Laser printers

- CD and DVD players

- Barcode scanners

- Some LiDAR sensors used in robotics and autonomous vehicles

- Laser pointers with very low output power

- Laser Diode:

- A laser diode is a semiconductor device that generates light through stimulated emission.

- Unlike an LED which relies on spontaneous emission, a laser diode requires an optical cavity to amplify the light. This amplification is achieved by injecting current between two parallel mirrors, which causes a chain reaction of light emission, resulting in a highly coherent light beam.

- EEL (Edge-Emitting Laser):

- In an edge-emitting laser (EEL), light is emitted from the edge of the substrate. A solid-state laser is grown within a semiconductor wafer, with the optical cavity parallel to the surface of the wafer. The wafer is cleaved at both ends and coated with a mirror to create the laser.

- VCSEL (Vertical-Cavity Surface-Emitting Laser):

- VCSELs were first used in the telecom industry, and today are widely used as light sources in sensing applications. Low power (mW) applications include face and gesture recognition, proximity sensors, and augmented reality displays. High power (Watts) applications include LiDAR systems for robotics, UAVs, and autonomous vehicles.

- A VCSEL is a semiconductor laser diode that emits its beam perpendicular to the top surface, as opposed to a conventional edge-emitting laser (EEL), which emits from its sides.

- Compared to an LED, a VCSEL has a much narrower and more focused beam angle. This means that for a lower current, the usable optical power from a VCSEL is significantly greater than an LED’s, as the light is directed more efficiently.

- Chip, Computer Chip and Integrated Circuit (IC):

- An Integrated Circuit (IC) is the technical term for a complex electronic circuit built on a small, flat piece of semiconductor material (most commonly silicon). This single component contains hundreds to billions of interconnected transistors, resistors, and capacitors.

- Terms are often used interchangeably but there are subtle differences:

- Chip: This is the most general term. It simply refers to the small piece of semiconductor material itself, before any circuitry is etched onto it.

- Integrated Circuit (IC): This is the precise technical term for the complex circuit design and its functionality on the chip. It describes the design and purpose of the electronic components.

- Computer Chip: This is a more specific term that refers to an IC designed for use in computers. Examples include microprocessors, memory chips, and graphics processing units (GPUs).

- AI Chips:

- Artificial intelligence (AI) chips are specially designed computer microchips used in the development of AI systems. Unlike other kinds of chips, AI chips are often built specifically to handle AI tasks, such as machine learning (ML), data analysis and natural language processing (NLP).

- Chips are made of silicon, a semiconductor material.

- According to The Economist, chipmakers on the island of Taiwan produce over 60% of the world’s semiconductors and more than 90% of its most advanced chips. Unfortunately, critical shortages and a fragile geopolitical situation are constraining growth.

- Nvidia, the world’s largest AI hardware and software company, relies almost exclusively on Taiwan Semiconductor Manufacturing Company (TSMC) for its most advanced AI chips.

- AI – Artificial Intelligence:

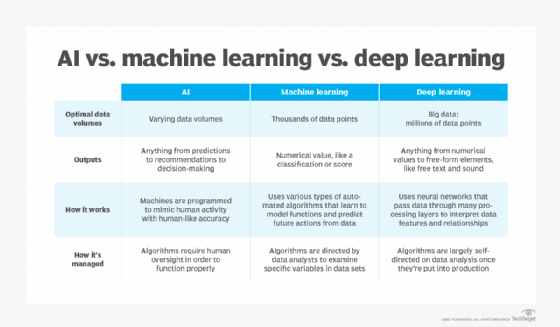

- Artificial intelligence is the simulation of human intelligence processes by machines, especially computer systems.

- As the hype around AI has accelerated, vendors have been scrambling to promote how their products and services use AI. Often what they refer to as AI is simply one component of AI, such as machine learning. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No one programming language is synonymous with AI, but several, including Python, R and Java, are popular.

- In general, AI systems work by ingesting large amounts of labeled training data, analyzing the data for correlations and patterns, and using these patterns to make predictions about future states. In this way, a chatbot that is fed examples of text chats can learn to produce lifelike exchanges with people, or an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples.

- AI programming focuses on three cognitive skills: learning, reasoning and self-correction.

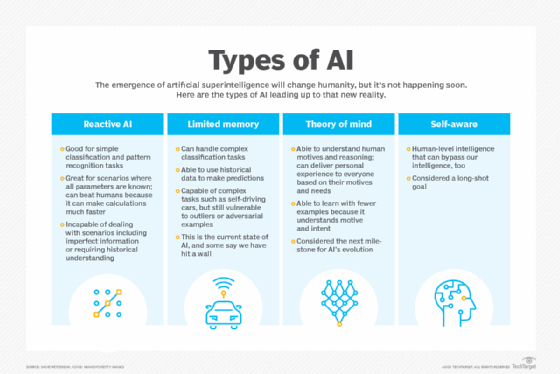

- The 4 types of artificial intelligence:

- Type 1: Reactive machines. These AI systems have no memory and are task specific. An example is Deep Blue, the IBM chess program that beat Garry Kasparov in the 1990s. Deep Blue can identify pieces on the chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future ones.

- Type 2: Limited memory. These AI systems have memory, so they can use past experiences to inform future decisions. Some of the decision-making functions in self-driving cars are designed this way.

- Type 3: Theory of mind. Theory of mind is a psychology term. When applied to AI, it means that the system would have the social intelligence to understand emotions. This type of AI will be able to infer human intentions and predict behavior, a necessary skill for AI systems to become integral members of human teams.

- Type 4: Self-awareness. In this category, AI systems have a sense of self, which gives them consciousness. Machines with self-awareness understand their own current state. This type of AI does not yet exist.

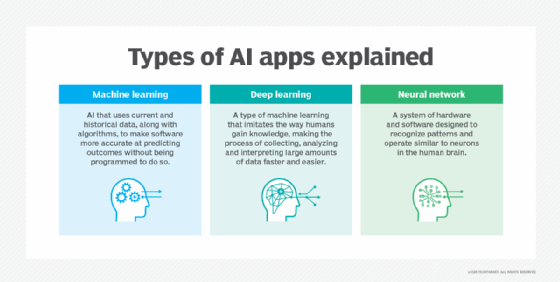

- Machine Learning (ML):

- Developed to mimic human intelligence, ML lets machines learn independently by ingesting vast amounts of data, applying statistical formulas and detecting patterns.

- ML allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so.

- ML algorithms use historical data as input to predict new output values.

- Recommendation engines are a common use case for ML. Other uses include fraud detection, spam filtering, business process automation (BPA) and predictive maintenance.

- Classical ML is often categorized by how an algorithm learns to become more accurate in its predictions. There are four basic approaches: supervised learning, unsupervised learning, semi-supervised learning and reinforcement learning.
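As a minimal illustration of the supervised approach, where historical inputs and outputs are used to predict a new value, the following Python sketch fits a straight line by ordinary least squares. The data points and function names are made up for illustration, and a real ML workflow would normally rely on a dedicated library and far more data.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Return (slope, intercept) minimising squared error on the samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(model: Tuple[float, float], x: float) -> float:
    """Apply the fitted line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Historical data (hypothetical): inputs and the outputs observed for them.
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [2.0, 4.0, 6.0, 8.0]

model = fit_line(history_x, history_y)
print(predict(model, 5.0))  # 10.0
```

The program is never told the rule "multiply by two"; it recovers it from the examples, which is the sense in which ML predicts outcomes without being explicitly programmed.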

- Deep Learning (DL):

- A subset of machine learning, Deep Learning enables much smarter results than were originally possible with ML. Face recognition is a good example.

- DL makes use of layers of information processing, each gradually learning more and more complex representations of data. The early layers may learn about colors, the next ones about shapes, the following about combinations of those shapes, and finally actual objects. DL demonstrated a breakthrough in object recognition.

- DL is currently the most sophisticated AI architecture we have developed.

- Computer Vision (CV):

- Computer vision is a field of artificial intelligence that enables computers and systems to derive meaningful information from digital images, videos and other visual inputs — and take actions or make recommendations based on that information.

- The most well-known case of this today is Google Translate, which can take an image of anything, from menus to signboards, and convert it into text that the program then translates into the user’s native language.

- Machine Vision (MV):

- Machine Vision is the ability of a computer to see; it employs one or more video cameras, analog-to-digital conversion and digital signal processing. The resulting data goes to a computer or robot controller. Machine Vision is similar in complexity to Voice Recognition.

- MV uses the latest AI technologies to give industrial equipment the ability to see and analyze tasks in smart manufacturing, quality control, and worker safety.

- Computer Vision systems can gain valuable information from images, videos, and other visuals, whereas Machine Vision systems rely on the image captured by the system’s camera. Another difference is that Computer Vision systems are commonly used to extract and use as much data as possible about an object.

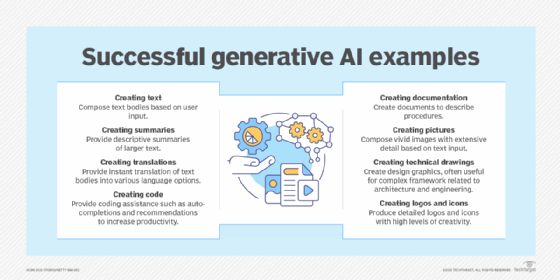

- Generative AI (GenAI):

- Generative AI technology generates outputs based on some kind of input, often a prompt supplied by a person. Some GenAI tools work in one medium, for example turning text inputs into text outputs. With the public release of ChatGPT in late November 2022, the world at large was introduced to an AI app capable of creating text that sounded more authentic and less artificial than any previous generation of computer-crafted text.

- Edge AI Technology:

- Edge artificial intelligence refers to the deployment of AI algorithms and AI models directly on local edge devices such as sensors or Internet of Things (IoT) devices, which enables real-time data processing and analysis without constant reliance on cloud infrastructure.

- Simply stated, edge AI, or “AI on the edge”, refers to the combination of edge computing and artificial intelligence to execute machine learning tasks directly on interconnected edge devices. Edge computing allows for data to be stored close to the device location, and AI algorithms enable the data to be processed right on the network edge, with or without an internet connection. This facilitates the processing of data within milliseconds, providing real-time feedback.

- Self-driving cars, wearable devices, security cameras, and smart home appliances are among the technologies that leverage edge AI capabilities to promptly provide users with real-time information when it is most essential.

- Multimodal Intelligence and Agents:

- Multimodal intelligence is a subset of artificial intelligence that integrates information from various modalities, such as text, images, audio, and video, to build more accurate and comprehensive AI models.

- Multimodal capabilities allow AI to interact with users in a more natural and intuitive way. It can see, hear and speak, which means that users can provide input and receive responses in a variety of ways.

- An AI agent is a computational entity designed to act independently. It performs specific tasks autonomously by making decisions based on its environment, inputs, and a predefined goal. What separates an AI agent from an AI model is the ability to act. There are many different kinds of agents such as reactive agents and proactive agents. Agents can also act in fixed and dynamic environments. Additionally, more sophisticated applications of agents involve utilizing agents to handle data in various formats, known as multimodal agents and deploying multiple agents to tackle complex problems.

- Small Language Models (SLM) and Large Language Models (LLM):

- Small Language Models (SLMs) are artificial intelligence (AI) models capable of processing, understanding and generating natural language content. As their name implies, SLMs are smaller in scale and scope than large language models (LLMs).

- LLM means Large Language Models — a type of machine learning/deep learning model that can perform a variety of natural language processing (NLP) and analysis tasks, including translating, classifying, and generating text; answering questions in a conversational manner; and identifying data patterns.

- For example, virtual assistants like Siri, Alexa, or Google Assistant use LLMs to process natural language queries and provide useful information or execute tasks such as setting reminders or controlling smart home devices.

- Agentic AI:

- Agentic AI is an artificial intelligence system that can accomplish a specific goal with limited supervision. It consists of AI agents—machine learning models that mimic human decision-making to solve problems in real time. In a multiagent system, each agent performs a specific subtask required to reach the goal and their efforts are coordinated through AI orchestration.

- Unlike traditional AI models, which operate within predefined constraints and require human intervention, agentic AI exhibits autonomy, goal-driven behavior and adaptability. The term “agentic” refers to these models’ agency, or their capacity to act independently and purposefully.

- Agentic AI builds on generative AI (gen AI) techniques by using large language models (LLMs) to function in dynamic environments. While generative models focus on creating content based on learned patterns, agentic AI extends this capability by applying generative outputs toward specific goals.

- High-Density AI:

- High-density AI refers to the concentration of AI computing power and storage within a compact physical space, often found in specialized data centers. This approach allows for increased computational capacity, faster training times, and the ability to handle complex simulations that would be impossible with traditional infrastructure.

- Intellectual Property (IP):

- Intellectual property (IP) refers to creations of the mind, such as inventions; literary and artistic works; designs; and symbols, names and images used in commerce.

- IP is protected in law by, for example, patents, copyright and trademarks, which enable people to earn recognition or financial benefit from what they invent or create. By striking the right balance between the interests of innovators and the wider public interest, the IP system aims to foster an environment in which creativity and innovation can flourish.